In the wake of Cambridge Analytica, data misappropriation, #deletefacebook, calls for regulation and pending testimony before the U.S. Congress, Facebook announced a series of initiatives to restrict data access, along with a renewed self-awareness to focus efforts on protecting people on the platform. What’s more notable, however, is that Mark Zuckerberg also hosted a rare, last-minute town hall with media and analysts to explain these efforts and take tough questions for the better part of an hour.

Let’s start with the company’s news on data restrictions.

To better protect Facebook user information, the company is making the following changes across nine priority areas over the coming months (Sourced from Facebook):

Events API: Until today, people could grant an app permission to get information about events they host or attend, including private events. Doing so allowed users to add Facebook Events to calendar, ticketing or other apps. According to the company, Facebook Events carry information about other people’s attendance as well as posts on the event wall. As of today, apps using the API can no longer access the guest list or posts on the event wall.

Groups API: Currently apps need permission of a group admin or member to access group content for closed groups. For secret groups, apps need the permission of an admin. However, groups contain information about people and conversations and Facebook wants to make sure everything is protected. Moving forward, all third-party apps using the Groups API will need approval from Facebook and an admin to ensure they benefit the group. Apps will no longer be able to access the member list of a group. Facebook is also removing personal information, such as names and profile photos, attached to posts or comments.

Pages API: Previously, third-party apps could use the Pages API to read posts or comments from any Page. Doing so let developers create tools to help Page owners perform common tasks such as scheduling posts and replying to comments or messages. At the same time, it also let apps access more data than necessary. Now, Facebook wants to ensure that Page information is only available to apps providing useful services to its community. All future access to the Pages API will need to be approved by Facebook.

Facebook Login: Two weeks ago, Facebook announced changes to Facebook Login. As of today, Facebook will need to approve all apps that request access to information such as check-ins, likes, photos, posts, videos, events and groups. Additionally, the company no longer allows apps to ask for access to personal information such as religious or political views, relationship status and details, custom friends lists, education and work history, fitness activity, book reading activity, music listening activity, news reading, video watch activity, and games activity. Soon, Facebook will also remove a developer’s ability to request data people shared with them if there has been no activity on the app in at least three months.

Instagram Platform API: Facebook is accelerating the deprecation of the Instagram Platform API effective today.

Search and Account Recovery: Previously, people could enter a phone number or email address into Facebook search to help find their profiles. According to Facebook, “malicious actors” have abused these features to scrape public profile information by submitting phone numbers or email addresses. Given the scale and sophistication of the activity, Facebook believes most people on Facebook could have had their public profile scraped in this way. This feature is now disabled. Changes are also coming to account recovery to reduce the risk of scraping.

Call and Text History: Call and text history was part of an opt-in feature for Messenger or Facebook Lite users on Android. Facebook has reviewed this feature to confirm that it does not collect the content of messages. Logs older than one year will be deleted. Moreover, broader data, such as the time of calls, will no longer be collected.

Data Providers and Partner Categories: Facebook is shuttering Partner Categories, a product that once let third-party data providers offer their targeting directly on Facebook. The company stated that “although this is common industry practice…winding down…will help improve people’s privacy on Facebook.”

App Controls: As of April 9th, Facebook will display a link at the top of the News Feed for users to see what apps they use and the information they have shared with those apps. Users will also have streamlined access to remove apps that they no longer need. The company will also reveal whether their information may have been improperly shared with Cambridge Analytica.

Cambridge Analytica may have had data from as many as 87 million people

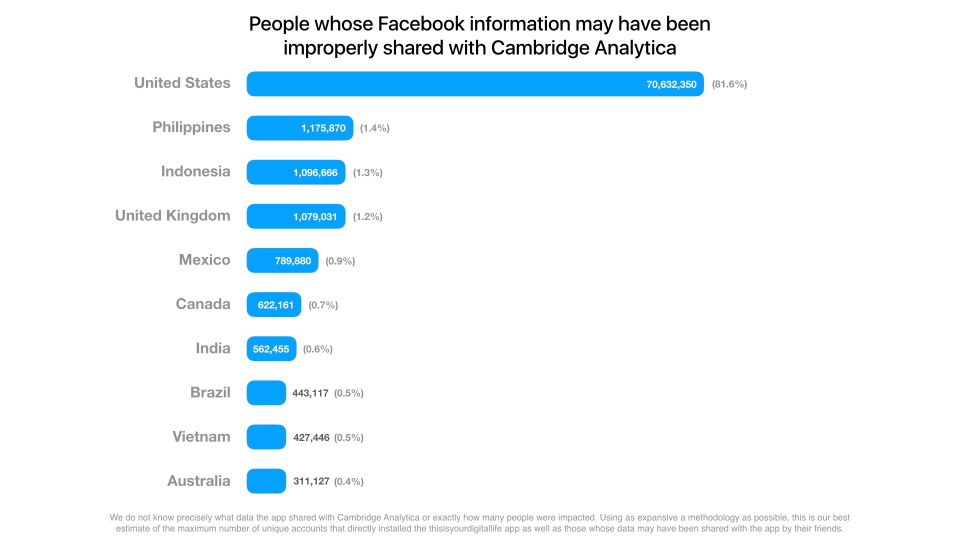

Facebook also made a startling announcement. After thorough review, the company believes that Cambridge Analytica may have collected information on as many as 87 million people. Of these users, 81.6% resided in the United States, with the rest scattered across the Philippines, Indonesia, the United Kingdom, Mexico, Canada and India, among others. Original reports from the New York Times estimated that the number of affected users was closer to 50 million.

Mark Zuckerberg Faces the Media; Shows Maturity and Also Naiveté and Strategic Acumen

In a rare move, Mark Zuckerberg invited press and analysts to a next-day call where he shared details on the company’s latest moves to protect user data, improve the integrity of information shared on the platform and protect users from misinformation. I have to give Mark credit. After initially going AWOL following the Cambridge Analytica data SNAFU, he’s been on a whirlwind media tour. He genuinely seems to want us to know he made mistakes, that he’s learning from them and that he’s trying to do the right thing. On our call, he stayed on beyond his allotted time to answer tough questions for the better part of 60 minutes.

From the outset, Mark approached the discussion by acknowledging that he and the rest of Facebook hadn’t done enough to date to prevent its latest fiasco, nor had they done enough to protect user trust.

“It’s clear now that we didn’t do enough in preventing abuse…that goes for fake news, foreign interference, elections, hate speech, in addition to developers and data privacy,” Zuckerberg stated. “We didn’t take a broad enough view what our responsibility is. It was my fault.”

He further pledged to right these wrongs while focusing on protecting user data and, ultimately, the Facebook experience.

“It’s not enough to just connect people. We have to make sure those connections are positive and that they’re bringing people closer together,” he said. “It’s not enough to give people a voice. We have to make sure that people aren’t using that voice to hurt people or spread disinformation. And it’s not enough to give people tools to manage apps. We have to ensure that all of those developers protect people’s information too. We have to ensure that everyone in our ecosystem protects information.”

Zuckerberg admitted that protecting data is just one piece of the company’s multi-faceted strategy to get the platform back on track. Misinformation, security issues and user-driven polarization still threaten facts, truth and upcoming elections.

He shared some of the big steps Facebook is taking to combat these issues. “Yesterday we took a big action by taking down Russian IRA pages,” he boasted. “Since we became aware of this activity…we’ve been working to root out the IRA to protect the integrity of elections around the world. All in, we now have about 15,000 people working on security and content review and we’ll have more than 20,000 by the end of this year. This is going to be a major focus for us.”

He added, “While we’ve been doing this, we’ve also been tracing back and identifying this network of fake accounts the IRA has been using so we can work to remove them from Facebook entirely. This is the first action that we’ve taken against the IRA and Russia itself. And it included identifying and taking down a Russian news organization. We have more work to do here.”

Highlights, Observations and Findings

This conversation was pretty dense. In fact, it took hours to pore over the conversation just to put this article together. I understand if you don’t have time to read through the entire interview or listen to the full Q&A. To help, I’ve gathered some of the highlights, insights and takeaways from our hour together.

1. Mark Zuckerberg wants you to know that he’s very sorry. He articulated on several occasions that he feels the weight of his mistakes, mischaracterizations and gross misjudgments on everything…user data, fake news, election tampering, polarization, data scraping, and user trust. He also wants you to know that he’s learning from his mistakes and his priority is fixing these problems while regaining trust moving forward. He sees this as a multi-year strategy of which Facebook is already one year underway.

2. Facebook now believes that up to 87 million users, not 50 million, mostly in the US, may have been affected by Kogan’s personality quiz app. Facebook does not know the extent to which user data was sold to or used by Cambridge Analytica. This was not a data breach, according to the company. People willingly took Kogan’s quiz.

3. Facebook has also potentially exposed millions of user profiles to data scraping due to existing API standards on other fronts over the years. Users who didn’t disable email/phone number search should assume their public info was scraped by sophisticated actors who evaded rate limiting. The extent of this scraping and how data was used by third parties is unknown. Facebook has since turned off access. Even so, it is unacceptable that it wasn’t taken seriously before and that we are only now hearing about this. Facebook must own its part in exposing data to bad actors who scraped information for nefarious purposes. Facebook’s role in not realizing how bad actors were gaming the system makes the company a bad actor in its own right. Ignorance is bliss until it’s not.

4. Mark believes that Facebook hasn’t done a good enough job explaining user privacy, how the company makes money and how it does and doesn’t use user content/data. This is changing.

5. Mark, along with the board and shareholders, believes he’s still the right person for the job. Two reporters asked directly whether he’d step down by force or choice. His answer was an emphatic “no.” His rationale is that this is his ship and he is the one who’s going to fix everything. He stated on several occasions that he wants to do the right thing. While I applaud his “awakening,” he has made some huge missteps as a leader that need more than promises to rectify. I still believe that Facebook would benefit from seasoned, strategic leadership to establish/renew a social contract with users, Facebook and its partners. The company is, after all, fighting wars on multiple fronts. And the company has demonstrated a pattern of either negligence or ignorance in the past and then apologizing afterward. One can assume that this pattern will only continue.

6. There’s still a fair amount of naïveté in play here when it comes to user trust, data and weaponizing information against Facebook users. Even though the company is aiming to right its wrongs, there’s more that lies ahead that the company and its key players cannot see yet. There’s a history of missing significant events here. And Mark has a history of downplaying these events, acting too late and apologizing after the fact. “I didn’t know” is not a suitable response. Even though the company is making important strides, there’s nothing to make me believe that sophisticated data thieves, information terrorists and shape-shifting scammers aren’t already a step or two ahead of the Facebook team. Remember, following the 2016 election, Mark said it was “crazy” that fake news could somehow sway an election. He’s since recanted that reaction, but it was still his initial response and belief.

7. Facebook is already taking action against economic actors, government interference and lack of truthfulness, and promises to do more. It has since removed thousands of Russian IRA accounts. Russia has responded that it considers Facebook’s moves “censorship.”

8. Not everything is Facebook’s fault, according to Facebook. Mark places some of the onus of responsibility on Facebook users who didn’t read the ToS, manage their data settings or fully understand what happens when you put your entire life online. In his view, and it’s a tough pill to swallow, no one forced users to take a personality quiz. No one is forcing people to share every aspect of their life online. While the company is making it easier for users to understand what they’re signing up for and how to manage what they share, people still don’t realize that with this free service comes an agreement that as a user, they are the product and their attention is for sale.

9. Moving forward, Facebook isn’t as worried about data breaches as it is about user manipulation and psyops. According to Mark, users are more likely to be susceptible to “social engineering” threats than to hacking and break-ins. Social engineering is the use of centralized planning and coordinated efforts to manipulate individuals to divulge information for fraudulent purposes. This can also be aimed at manipulating individual perspectives and behaviors, and at influencing social change (for better or for worse). Users aren’t prepared to fully understand if, when and how they’re susceptible to manipulation, and I’d argue that research needs to be done in understanding how we’re influencing one another based on our own cognitive biases and how we choose to share and perceive information in real-time.

10. Facebook really wants you to know that it doesn’t sell user data to advertisers. But, it also acknowledges that it could have done and will do a better job in helping users understand Facebook’s business model. Mark said that users want “better ads” and “better experiences.” In addition to fighting information wars, Facebook is also prioritizing ad targeting, better news feeds, and the creation/delivery of better products and services that users love.

11. Even though upwards of 87 million users may have been affected by Kogan’s personality quiz and some of that user information was sold to and used by Cambridge Analytica, and that user data was also compromised in many other ways for years, the #deletefacebook movement had zero meaningful impact. But still, Mark says that the fact the movement even gained any momentum is “not good.” This leads to a separate but related conversation about user addictiveness and dependency on these platforms that kill movements such as #deletefacebook before they gain momentum.

12. Users cannot rely on Facebook, YouTube, Twitter, Reddit, et al., to protect them. Respective leaders of each of these platforms MUST fight bad actors to protect users. At the same time, they are not doing enough. Users are, in many ways, unwitting pawns in what amounts to not only social engineering, but full-blown information warfare and psyops to cause chaos, disruption or worse. Make no mistake, people, their minds and their beliefs are under attack. It’s not just the “bad actors.” We are witnessing true villains, regardless of intent, damage, abuse and undermine human relationships, truth and digital and real-world democracy.

13. People and their relationships with one another are being radicalized and weaponized right under their noses. No one is teaching people how this even happens. What’s more, we are still not exposing the secrets of social design that make these apps and services addictive. In the face of social disorder, people are still readily sharing everything about themselves online and believe they are in control of their own experiences, situational analyses and resulting emotions. I don’t know that people could really walk away even if they wanted to, and that’s what scares me the most. Regulation is coming.

Q&A in Full: The Whole Story According to Zuckerberg

Please note that this call was 60 minutes long and what follows is not a complete transcript. I went through the entire conversation to surface key points and context.

David McCabe, Axios: “Given the numbers [around the IRA] have changed so drastically, why should lawmakers and why should users trust that you’re giving them a full and accurate picture now?”

Zuckerberg: “There is going to be more content that we’re going to find over time. As long as there are people employed in Russia who have the job of trying to find ways to exploit these systems, this is going to be a never-ending battle. You never fully solve security, it’s an arms race. In retrospect, we were behind and we didn’t invest in it upfront. I’m confident that we’re making progress against these adversaries. But they’re very sophisticated. It would be a mistake to assume that you can fully solve a problem like this…”

Rory Cellan-Jones, BBC: “Back in November 2016, you dismissed as crazy the idea that fake news could have swung the election. Are you taking this seriously enough…?”

Zuckerberg: “Yes. I clearly made a mistake by just dismissing fake news as crazy as [not] having an impact. What I think is clear at this point, is that it was too flippant. I should never have referred to it as crazy. This is clearly a problem that requires careful work…This is an important area of work for us.”

Ian Sherr, CNET: “You just announced 87 million people affected by Cambridge Analytica, how long have you known this number because the 50 million number has been out there for a while. It feels like the data keeps changing on us and we’re not getting a full forthright view of what’s going on here.”

Zuckerberg: “We only just finalized our understanding of the situation in the last couple of days. We didn’t put out the 50 million number…we wanted to wait until we had a full understanding. Just to give you the complete picture on this, we don’t have logs going back for when exactly [Aleksandr] Kogan’s app queried for everyone’s friends…We wanted to take a broad view and a conservative estimate. I’m quite confident given our analysis, that it is not more than 87 million. It very well could be less…”

David Ingram, Reuters: “…Why weren’t there audits of the use of the social graph API years ago, in the 2010 – 2015 period?”

Zuckerberg: “In retrospect, I think we should have been doing more all along. Just to speak to how we were thinking about it at the time, as just a matter of explanation, I’m not trying to defend this now…I think our view in a number of aspects of our relationship with people was that our job was to give them tools and that it was largely people’s responsibility in how they chose to use them…I think it was wrong in retrospect to have that limited of a view but the reason why we acted the way that we did was because I think we viewed when someone chose to share their data and then the platform acted in a way that it was designed with the personality quiz app, our view is that, yes, Kogan broke the policies. And, he broke expectations, but also people chose to share that data with them. But today, given what we know, not just about developers, but across all of our tools and just across what our place in society is, it’s such a big service that’s so central in people’s lives, I think we understand that we need to take a broader view of our responsibility. We’re not just building tools; we have to take responsibility for the outcomes in how people use those tools as well. That’s why we didn’t do it at the time. Knowing what I know today, clearly we should have done more and we will going forward.”

Cecilia Kang, NY Times: “Mark, you have indicated that you could be comfortable with some sort of regulation. I’d like to ask you about privacy regulations that are about to take effect in Europe…GDPR. Would you be comfortable with those types of data protection regulation in the U.S. and with global users?”

Zuckerberg: “Regulations like the GDPR are very positive…We intend to make all the same controls and settings everywhere not just Europe.”

Tony Romm, Washington Post: “Do you believe that this [data scraping] was all in violation of your 2011 settlement with the FTC?”

Zuckerberg: “We’ve worked hard to make sure that we comply with it. The reality here is that we have to take a broader view of our responsibility, rather than just legal responsibility. We’re focused on doing the right thing and making sure people’s information is protected. We’re doing investigations, we’re locking down the platform, etc. I think our responsibilities to the people who use Facebook are greater than what’s written in that order and that’s the standard that I want to hold us to.”

Hannah Kuchler, Financial Times: “Investors have raised a lot of concerns about whether this is the result of corporate governance issues at Facebook. Has the board discussed whether you should step down as chairman?”

Zuckerberg: “Ahhh, not that I’m aware of.”

Alexis Madrigal, Atlantic: “Have you ever made a decision that benefitted Facebook’s business but not the community?”

Zuckerberg: “The thing that makes our product challenging to manage and operate are not the trade-offs between people and the business, I actually think that those are quite easy, because over the long term the business will be better if you serve people. I just think it would be near-sighted to focus on short-term revenue over what value to people is and I don’t think we’re that short-sighted. All of the hard decisions we have to make are actually trade-offs between people. One of the big differences between the type of product we’re building, which is why I refer to it as a community, and what I think some of the specific governance issues we have are, is that different people who use Facebook have different interests. Some people want to share political speech that they think is valid, while others think it’s hate speech. These are real values questions and trade-offs between free expression on one hand and making sure it’s a safe community on the other hand…we’re doing that in an environment that’s not static. The social norms are changing continually and they’re different in every country around the world. Getting those trade-offs right is hard and we certainly don’t always get them right.”

Alyssa Newcomb, NBC News: “You’ve said that you’ve clearly made mistakes in the past. Do you still think you’re the best person to run Facebook moving forward?”

Zuckerberg: “Yes. I think life is about learning from the mistakes and figuring out what you need to do to move forward. The reality is that when you’re building something like Facebook that is unprecedented in the world, there are going to be things that you mess up…I don’t think anyone is going to be perfect. I think that what people can hold us accountable for is learning from the mistakes and continually doing better and continuing to evolve what our view of our responsibility is. And, at the end of the day, whether we’re building things that people like and if it makes their lives better. I think it’s important not to lose sight of that through all of this. I’m the first to admit that we didn’t take a broad enough view of what our responsibilities were. I also think it’s important to keep in mind that there are billions of people who love the services that we’re building because they’re getting real value…That’s something that I’m really proud of my company for doing…”

Josh Constine, TechCrunch: “Facebook explained that the account recovery and search tools using emails and phone numbers could have been used to scrape information about all of Facebook’s users. When did Facebook find out about this scraping operation and, if that was before a month ago, why didn’t Facebook inform the public about it immediately?”

Zuckerberg: “We looked into this and understood it more over the last few days as part of the audit of our overall system. Everyone has a setting on Facebook that controls, it’s right in your privacy settings, whether people can look you up by your contact information. Most people have that turned on and that’s the default. A lot of people have also turned it off. It’s not quite everyone. Certainly, the potential here would be that over the period of time this feature has been around, people have been able to scrape public information. It is reasonable to expect that, if you had that setting turned on, that at some point over the last several years, someone has probably accessed your public information in this way.”

Will Oremus, Slate: “You run a company that relies on people who are willing to share data that is then used to target them with ads. We also now know it can be used to manipulate them in ways they don’t expect. We also know that you are very protective of your own privacy in certain ways. You acknowledged you put tape over your webcam at one point. I think you bought the lot around one of your homes to get more privacy. What other steps do you take to protect your privacy online? As a user of Facebook, would you sign up for apps like the personality quiz?”

Zuckerberg: “I certainly use a lot of apps. I’m a power user of the internet. In order to protect privacy, I would advise that people follow a lot of the best practices around security. Turn on two-factor authentication. Change your passwords regularly. Don’t have password recovery tools be information that you make publicly available…look out and understand that most of the attacks are going to be social engineering and not necessarily people trying to break into security systems. For Facebook specifically, I think one of the things we need to do…are just the privacy controls that you already have. Especially leading up to the GDPR event, people are going to ask if we’re going to implement all of those things. My answer to that is, we’ve had almost all of what’s implemented in there for years…the fact that most people are not aware of that is an issue. We need to do a better job of putting those tools in front of people and not just offering them. I would encourage people to use them and make sure they’re comfortable with how their information is used on our systems and others.”

Sarah Frier, Bloomberg: “There’s broad concern that user data given years ago could be anywhere by now. What results do you hope to achieve from the audits and what won’t you be able to find?”

Zuckerberg: “No measure you take on security is going to be perfect. But, a lot of the strategy has to involve changing the economics of potential bad actors to make it not worth doing what they might do otherwise. We’re not going to be able to go out and find every bad use of data. What we can do is make it a lot harder for folks to do that moving forward, change the calculus on anyone who’s considering doing something sketchy going forward, and I actually do think we’ll eventually be able to uncover a large amount of bad activity of what exists and we will be able to go in and do audits to make sure people get rid of that data.”

Steve Kovach, Business Insider: “Has anyone been fired related to the Cambridge Analytica issue or any data privacy issue?”

Zuckerberg: “I have not. I think we’re still working through this. At the end of the day, this is my responsibility. I started this place. I run it. I’m responsible for what happens here. I’m going to do the best job helping to run it going forward. I’m not looking to throw anyone else under the bus for mistakes that we made here.”

Nancy Cordes, CBS News: “Your critics say Facebook’s business model depends on harvesting personal data, so how can you reassure users that their information isn’t going to be used in a way that they don’t expect?”

Zuckerberg: “I think we can do a better job of explaining what we actually do. There are many misperceptions around what we do that I think we haven’t succeeded in clearing up for years. First, the vast majority of the data that Facebook knows about you is because you chose to share it. It’s not tracking…we don’t track and we don’t buy and sell [data]…In terms of ad activity, that’s a relatively smaller part of what we’re doing. The majority of the activity is people actually sharing information on Facebook, which is why I think people understand how much content is there because they put all the photos and information there themselves. For some reason, we haven’t been able to kick this notion for years, that we sell data to advertisers. We don’t. It just goes counter to our own incentives…We can certainly do a better job of trying to explain this and make these things understandable. The reality is that the way we run the service is, people share information, we use that to help people connect and to make the services better, and we run ads to make it a free service that everyone in the world can afford.”

Rebecca Jarvis, ABC News: “Cambridge Analytica has tweeted now since this conversation began, ‘When Facebook contacted us to let us know the data had been improperly obtained, we immediately deleted the raw data from our file server, and began the process of searching for and removing any of its derivatives in our system.’ Now that you have this finalized understanding, do you agree with Cambridge Analytica’s interpretation in this tweet and will Facebook be pursuing legal action against them?”

Zuckerberg: “I don’t think what we announced today is connected to what they just said at all. What we announced with the 87 million is the maximum number of people that we could calculate could have been accessed. We don’t know how many people’s information Kogan actually got. We don’t know what he sold to Cambridge Analytica. We don’t know today what they have in their system. What we have said and what they agreed to is to do a full forensic audit of their systems so we can get those answers. But at the same time, the UK government and the ICO are doing a government investigation and that takes precedence. We’ve stood down temporarily…and once that’s done, we’ll resume ours so we can get answers to the questions you’re asking and ultimately make sure that none of the data persists or is being used improperly. At that point, if it makes sense, we will take legal action, if we need to do that to protect people’s information.”

Alex Kantrowitz, BuzzFeed: “Facebook’s so good at making money. I wonder if your problems could somewhat be mitigated if the company didn’t try to make so much. You could still run Facebook as a free service, but collect significantly less data and offer significantly less ad targeting…so, I wonder if that would put you and society at less risk.”

Zuckerberg: “People tell us that if they’re going to see ads, they want the ads to be good. The way the ads are good is making it so that when someone tells us they have an interest…that the ads are actually relevant to what they care about. Like most of the hard decisions that we make, this is one where there’s a trade-off between values people really care about. On the one hand, people want relevant experiences and on the other hand, I do think that there’s some discomfort with how data is used in systems like ads. I think the feedback is overwhelmingly on the side of wanting a better experience…”

Nancy Scola, Politico: “When you became aware in 2015 that Cambridge Analytica inappropriately accessed this Facebook data, did you know that firm’s role in American politics and in Republican politics in particular?”

Zuckerberg: “I certainly didn’t. One of the things in retrospect…people ask, ‘why didn’t you ban them back then?’ We banned Kogan’s app from the platform back then. Why didn’t we do that? It turns out, in our understanding of the situation, that they weren’t using any of Facebook’s services back then. They weren’t an advertiser, although they went on to become one in the 2016 election. They weren’t administering tools and they didn’t build an app directly. They were not really a player we had been paying attention to.”

Carlos Hernandez, Expansion: “Mark, you mentioned that one of the main important aspects of Facebook is the people. And, one of the biggest things around the use of these social platforms is the complexity of users understanding how these companies store data and use their information. With everything that is happening, how can you help users learn better how Facebook, WhatsApp and Instagram are collecting and using data?”

Zuckerberg: “I think we need to do a better job of explaining the principles that the service operates under. But, the main principles are, you have control of everything you put on the service, most of the content that Facebook knows about you is because you chose to share that content with friends and put it on your profile and we’re going to use data to make the services better…but, we’re never going to sell your information. If we can get to a place where we can communicate that in a way people understand it, then we have a shot at distilling this down to a simpler thing. That’s certainly not something we’ve succeeded at doing historically.”

Kurt Wagner, Recode: “There’s been the whole #deletefacebook thing from a couple of weeks ago, there’s been advertisers who have said that they’re pulling advertising money or pulling their pages down altogether, I’m wondering if on the back end, have you seen any actual change in usage from users or change in ad buys over the last couple weeks…”

Zuckerberg: “I don’t think there’s been any meaningful impact that we’ve observed. But look, it’s not good. I don’t want anyone to be unhappy with our services or what we do as a company. Even if we can’t measure a change in the usage of the products or the business…it still speaks to feeling like this was a massive breach of trust and we have a lot of work to do to repair that.”

Fernando Santillanes, Grupo Milenio: “There’s a lot of concern in Mexico about fake news. Associating with media to identify these fake articles is not enough. What do you say to all the Facebook users who want to see Facebook take a more active position to detect and suppress fake news?”

Zuckerberg: “This is an important question. 2018 is going to be an important year for protecting election integrity around the world. Let me talk about how we’re fighting fake news across the board. There are three different types of activity that require different strategies for fighting them. It’s important people understand all of what we’re doing here. The three basic categories are, 1) there are economic actors who are basically spammers, 2) governments trying to interfere in elections, which is basically a security issue and 3) polarization and lack of truthfulness in what you describe as the media.”

In response to economic actors, he explained, “These are folks like the Macedonian trolls. What these folks are doing, it’s just an economic game. It’s not ideological at all. They come up with the most sensational thing they can in order to get you to click on it so they can make money on ads. If we can make it so that the economics stop working for them, then they’ll move on to something else. These are literally the same type of people who have been sending you Viagra emails in the 90s. We can attack it on both sides. On the revenue side, we make it so that they can’t run on the Facebook ad network. On the distribution side, we make it so that as we detect this stuff, it gets less distribution on News Feeds.”

The second category involves national security issues, i.e. Russian election interference. Zuckerberg’s response to solve this problem involves identifying bad actors, “People are setting up these large networks of fake accounts and we need to track that really carefully in order to remove it from Facebook entirely as a security issue.”

The third category is about media, which Zuckerberg believes requires deeper fact checking. “We find that fact checkers can review high volume things to show useful signals and remove from feeds if it’s a hoax. But there’s still a big polarization issue. Even if someone isn’t sharing something that’s false, they are cherry picking facts to tell one side of a story where the aggregate picture ends up not being true. There, the work we need to do is to promote broadly trusted journalism. The folks who, people across society, believe are going to take the full picture and do a fair and thorough job.”

He closed on that topic on an optimistic note, “Those three streams, if we can do a good job on each of those, will make a big dent across the world and that’s basically the roadmap that we’re executing.”

Casey Newton, The Verge: “With respect to some of the measures you’re putting into place to protect election integrity and to reduce fake news…how are you evaluating the effectiveness of the changes you’re making and how will you communicate wins and losses…?”

Zuckerberg: “One of the big things we’re working on now is a major transparency effort to be able to share the prevalence of different types of bad content. One of the big issues that we see is a lot of the debate around fake news or hate speech happens through anecdotes. People see something that’s bad and shouldn’t be allowed on the service and they call us out on it, and frankly they’re right, it shouldn’t be there and we should do a better job of taking that down. But, what I think is missing from the debate today is the prevalence of these different categories of bad content. Whether it’s fake news and all the different kinds therein, hate speech, bullying, terror content, all of the things that I think we can all agree are bad and we want to drive down, the most important thing there is to make sure that the numbers that we put out are accurate. We wouldn’t be doing anyone a favor by putting out numbers and coming back a quarter later saying, ‘hey, we messed this up.’ Part of transparency is to inform the public debate and build trust. If we have to go back and restate those because we got it wrong, the calculation internally is that it’s much better to take a little longer to make sure we’re accurate than to put something out that might be wrong. We should be held accountable and measured by the public. It will help create more informed debate. And, my hope over time is that the playbook and scorecard that we put out will also be followed by other internet platforms so that way there can be a standard measure across the industry.”

Barbara Ortutay, Associated Press, “What are you doing differently now to prevent things from happening and not just respond after the fact?”

Zuckerberg: “Going forward, a lot of the new product development has already internalized this perspective of the broader responsibility we’re trying to take to make sure our tools are used well. Right now, if you take the election integrity work, in 2016 we were behind where we wanted to be. We had a more traditional view of the security threats. We expected Russia and other countries to try phishing and other security exploits, but not necessarily the misinformation campaign that they did. We were behind. That was a really big miss. We want to make sure we’re not behind again. We’ve been proactively developing AI tools to detect trolls who are spreading fake news or foreign interference…we were able to take down thousands of fake accounts. We’re making progress. It’s not that there’s no bad content out there. I don’t want to ever promise that we’re going to find everything…we need to strengthen our systems. Across the different products that we are building, we are starting to internalize a lot more that we have this broader responsibility. The last thing that I’ll say on this, I wish I could snap my fingers and in six months, we’ll have solved all of these issues. I think the reality is that given how complex Facebook is, and how many systems there are, and how we need to rethink our relationship with people and our responsibility across every single part of what we do, I do think this is a multiyear effort. It will continue to get better every month.”

As I once said, and believe more today than before, with social media comes great responsibility. While optimism leads to great, and even unprecedented, innovation, it can also keep a company from seeing what lies ahead in time to thwart looming harm and destruction. Zuckerberg and company have to do more than fix what’s broken. They have to look forward to break and subsequently fix what trolls, hackers and bad actors are already seeking to undermine. And it’s not just Facebook. YouTube, Google, Instagram, Reddit, 4/8Chan et al., have to collaborate and coordinate massive efforts to protect users, suppress fake news and promote truth.

Brian Solis

Brian Solis is principal analyst and futurist at Altimeter, the digital analyst group at Prophet. Brian is a world-renowned keynote speaker and 7x best-selling author. His latest book, X: Where Business Meets Design, explores the future of brand and customer engagement through experience design. Invite him to speak at your event or bring him in to inspire and change executive mindsets.

The post If you had one hour with Mark Zuckerberg, what would you ask? Here’s what I learned about the state and future of Facebook, data, politics and bad actors appeared first on Brian Solis.